Deviance is a number that measures the goodness of fit of a logistic regression model. Think of it as the distance from the perfect fit — a measure of how much your logistic regression model deviates from an ideal model that perfectly fits the data.

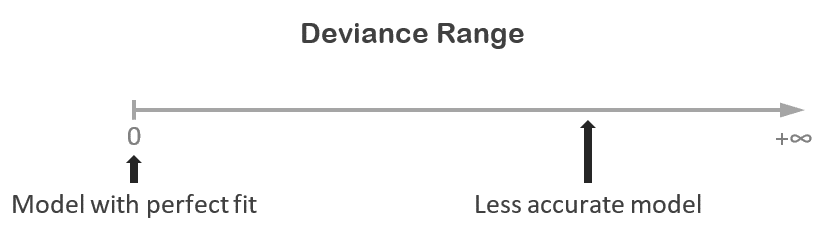

Deviance ranges from 0 to infinity.

The smaller the deviance, the better the model fits the sample data (a deviance of 0 means that the logistic regression model describes the data perfectly). Higher values of the deviance correspond to a worse fit.
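To make the definition concrete, here is a minimal sketch of how the deviance can be computed by hand. It assumes an ungrouped binary (0/1) outcome, in which case the deviance reduces to -2 times the log-likelihood of the model. The function name `binomial_deviance` and the example probabilities are made up for illustration.

```python
import math

def binomial_deviance(y, p, eps=1e-12):
    """Deviance of predicted probabilities p against 0/1 outcomes y.

    Assumes ungrouped binary data, so deviance = -2 * log-likelihood.
    """
    total = 0.0
    for yi, pi in zip(y, p):
        pi = min(max(pi, eps), 1 - eps)  # clip to avoid log(0)
        total += yi * math.log(pi) + (1 - yi) * math.log(1 - pi)
    return -2.0 * total

# Illustrative data (not from a real study)
y = [1, 0, 1, 1, 0]
near_perfect = [0.99, 0.01, 0.99, 0.99, 0.01]  # confident and correct
uninformative = [0.5] * 5                      # a coin flip for everyone

d_good = binomial_deviance(y, near_perfect)
d_flat = binomial_deviance(y, uninformative)
print(d_good, d_flat)  # the first value is much smaller than the second
```

Predictions that sit close to the observed outcomes give a deviance near 0, while uninformative predictions give a much larger value.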

What is a good value for the deviance?

In general, the lower the deviance, the better, but there is no threshold for an acceptable value.

The deviance is not meant to be interpreted on its own. Instead, you can use it to compare your logistic regression model to either:

- A reference model

- Another model that includes either a larger or smaller subset of predictors

1. Comparison with a reference model

You can compare the deviance of your logistic regression model with that of a reference (null) model that has no independent variables and includes only the intercept.

This reference model predicts the same probability for every observation: the proportion of observations with Y = 1 in the sample (the average of Y).

Since your model includes some predictors, we would expect it to fit the data better than the reference model, i.e. to have a lower deviance.

The size of the difference between the deviance of your model and that of the reference model reflects how much the independent variables, taken together, improve the fit.
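The comparison with the null model can be sketched as follows. This assumes an ungrouped 0/1 outcome. The outcome vector and the "fitted" probabilities in `p_model` are hypothetical values chosen for illustration, not the output of a real fit.

```python
import math

def binomial_deviance(y, p):
    """-2 * log-likelihood for 0/1 outcomes y and probabilities p."""
    return -2.0 * sum(
        yi * math.log(pi) + (1 - yi) * math.log(1 - pi)
        for yi, pi in zip(y, p)
    )

y = [1, 0, 1, 1, 0, 1, 0, 1]

# Null model: every observation gets the sample proportion of Y = 1
p_null = sum(y) / len(y)
null_dev = binomial_deviance(y, [p_null] * len(y))

# Hypothetical probabilities from a model that includes predictors
p_model = [0.9, 0.2, 0.8, 0.7, 0.3, 0.85, 0.1, 0.6]
model_dev = binomial_deviance(y, p_model)

drop = null_dev - model_dev  # how much the predictors reduce the deviance
print(null_dev, model_dev, drop)
```

A large positive `drop` suggests that the predictors carry information about the outcome; a drop close to 0 suggests that they add little beyond the intercept.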

2. Comparison with a model of different size

You can also compare the deviance of your model with that of another one that includes, for example, 1 additional independent variable.

The goal is to get a sense of the importance of this additional predictor.

The larger the drop in deviance when moving from the smaller model to the larger one, the more this variable contributes to the fit.

Should you always prefer models with lower deviance?

Not necessarily.

It is true that the model with the lowest deviance fits the sample data better than any other candidate model. The problem is that it may not generalize well to new data.

In this case we say that the model is overfitting the sample data.

So optimizing for the smallest deviance on the sample data does not guarantee a small deviance on out-of-sample data.

Why not?

The problem is that when one model contains all the predictors of another plus some extra ones, the larger model will never have a higher deviance than the smaller one. In other words, you cannot worsen the fit to the sample data by adding more variables: in the worst case, if the additional variables are not important at all, the model can set their coefficients equal to 0 and match the smaller model.

So if you choose the model with the lowest deviance, you will always end up picking a model that includes all the predictors under consideration.

This is not desirable because not all the independent variables will be good predictors of the outcome. Some will look like good predictors just by chance: among predictors that have no real relationship with the outcome, about 1 in 20 will, on average, have a p-value < 0.05.

Therefore, if you want to compare models of different sizes by using the deviance, you will also need to adjust for the number of predictors.

One way of doing this is to use AIC (Akaike’s Information Criterion), which is based on the deviance but adds a penalty of 2 for each estimated parameter, so more complex models are penalized.
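As a rough illustration of the penalty, the sketch below computes AIC from the deviance. It assumes an ungrouped binary outcome, where AIC equals the deviance plus 2 times the number of estimated parameters (intercept included). The deviances and parameter counts are hypothetical.

```python
def aic_from_deviance(deviance, n_params):
    """AIC for a binary-outcome logistic model: deviance + 2 * parameters."""
    return deviance + 2 * n_params

# Hypothetical models: the larger one has a slightly lower deviance
small_model_aic = aic_from_deviance(deviance=120.4, n_params=3)
large_model_aic = aic_from_deviance(deviance=119.9, n_params=6)

print(small_model_aic, large_model_aic)
# The smaller model has the lower AIC despite its higher deviance
```

Here the three extra predictors lower the deviance by only 0.5, which does not make up for the added penalty of 6, so AIC favors the smaller model.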

How the deviance is used in practice

Here are 3 use cases for the deviance:

1. Deviance is used under the hood in calculating the logistic regression model coefficients

Deviance is the equivalent of the sum of squared errors in linear regression.

When you run a linear regression, the model coefficients are calculated by minimizing the sum of squared residuals. Likewise, when you run a logistic regression, the model coefficients are calculated by minimizing the deviance, which is equivalent to maximizing the likelihood.
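The following sketch shows the idea for a model with an intercept and a single predictor. It uses Newton's method, one common way of minimizing the deviance; statistical software may use a different but equivalent algorithm. The data and the function names are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def deviance(x, y, b0, b1):
    """-2 * log-likelihood of the model p = sigmoid(b0 + b1 * x)."""
    total = 0.0
    for xi, yi in zip(x, y):
        p = sigmoid(b0 + b1 * xi)
        total += yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return -2.0 * total

def fit_logistic(x, y, n_iter=25):
    """Newton's method for an intercept and one slope."""
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = sigmoid(b0 + b1 * xi)
            w = p * (1 - p)
            g0 += yi - p           # gradient of the log-likelihood
            g1 += (yi - p) * xi
            h00 += w               # information matrix (2 x 2)
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Solve the 2 x 2 system for the Newton step
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Illustrative data with overlapping classes (not perfectly separable)
x = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
y = [0, 0, 1, 0, 1, 0, 1, 1]

b0, b1 = fit_logistic(x, y)
start_dev = deviance(x, y, 0.0, 0.0)
fitted_dev = deviance(x, y, b0, b1)
print(b0, b1, start_dev, fitted_dev)
```

Moving the fitted coefficients in any direction increases the deviance, which is what it means for them to be the minimizing values.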

2. Deviance can be used to create a test for the global fit of the logistic regression model

Based on the difference between the deviance of a null model (a model without predictors) and that of a model with predictors, we can calculate a p-value to test if the independent variables provide a statistically significant improvement on the null model.

This test, known as the likelihood ratio test, can also be used to compare any two nested models, that is, two models where the predictors of one are a subset of the predictors of the other.
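A minimal sketch of this test is shown below. It relies on the standard large-sample result that, for nested models, the difference in deviance approximately follows a chi-square distribution with degrees of freedom equal to the number of extra parameters. The helper `chi2_sf` is written by hand to keep the example free of dependencies, and the deviances are hypothetical.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability of a chi-square distribution (integer df)."""
    if x <= 0:
        return 1.0
    z = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-z)             # exact tail for df = 2
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))  # exact tail for df = 1
    # Recurrence that raises the degrees of freedom by 2 at each step
    while a < df / 2.0:
        q += z ** a * math.exp(-z) / math.gamma(a + 1.0)
        a += 1.0
    return q

def deviance_test(smaller_dev, larger_dev, extra_params):
    """Likelihood ratio test for two nested models."""
    statistic = smaller_dev - larger_dev
    return statistic, chi2_sf(statistic, extra_params)

# Hypothetical deviances: the larger model has 2 extra predictors
statistic, p_value = deviance_test(134.2, 126.1, extra_params=2)
print(statistic, p_value)
```

A small p-value suggests that the extra predictors improve the fit by more than would be expected by chance alone.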

3. Deviance is used to calculate Nagelkerke’s R²

Much like R² in linear regression, Nagelkerke’s R² is a measure that uses the deviance to estimate how much variability is explained by the logistic regression model.

It is a number between 0 and 1.

The closer the value is to 1, the better the model explains the outcome.
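For readers who want to see the calculation, here is a sketch based on the usual definition: the Cox and Snell R² divided by its maximum attainable value. It assumes an ungrouped binary outcome, so that each deviance equals -2 times the log-likelihood. The input numbers are hypothetical.

```python
import math

def nagelkerke_r2(null_deviance, model_deviance, n):
    """Nagelkerke's R-squared from the null and model deviances.

    n is the number of observations.
    """
    cox_snell = 1.0 - math.exp((model_deviance - null_deviance) / n)
    max_cox_snell = 1.0 - math.exp(-null_deviance / n)
    return cox_snell / max_cox_snell

# Hypothetical values for a sample of 100 observations
r2 = nagelkerke_r2(null_deviance=134.2, model_deviance=101.5, n=100)
print(r2)
```

The value is 0 when the model does no better than the null model and 1 when the model deviance is 0.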
